As a software engineer, I know extremist content can be curbed. After Christchurch, it’s more urgent than ever

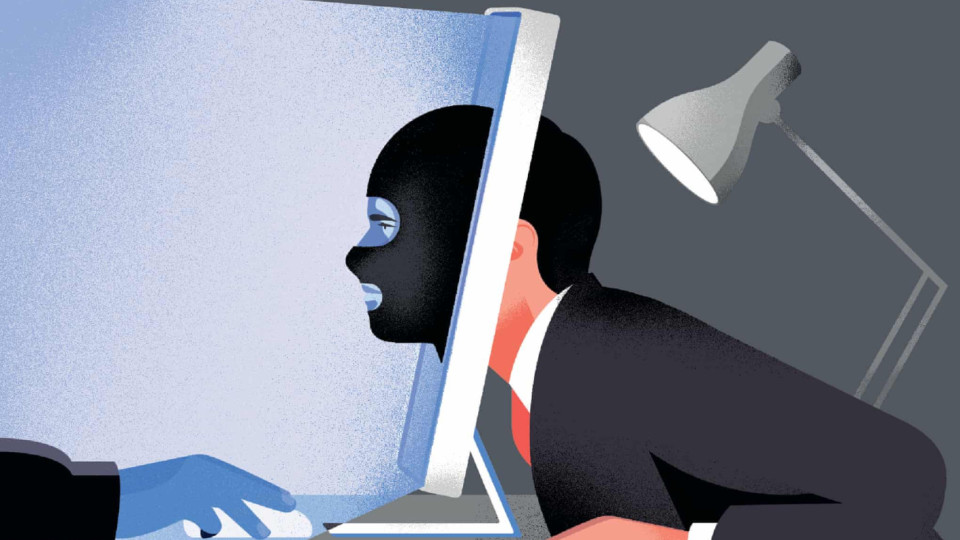

Like so many, I was shocked to the core by the recent killing of 50 Muslim worshippers in New Zealand. As I absorbed the news, my thoughts – for reasons I will shortly explain – turned to the technology that is so closely linked to the atrocity. And let me say this clearly: major platforms such as YouTube and Facebook are a primary and active component in the radicalisation of, mostly, young men.

These organisations counter that they aim to take down content that violates their rules swiftly, and are increasing resources for efforts to identify and remove dangerous material before it causes harm. But clearly this isn’t enough. And by not doing enough to police their platforms, they risk being complicit in innocent lives being violently cut short. It is within their power to remove extremist content and users from their platforms, and they’re failing to do so in any meaningful way. Crucially, this is not caused by insurmountable technical problems.

I write these words with a high degree of confidence, speaking as an experienced software engineer who has spent much of his career writing similar code for dozens of large companies. More importantly, though, I write them with a heartfelt and burning concern, as the brother of Martyn Hett, who was killed in the 2017 attack on the Manchester Arena at the hands of a young man who was radicalised in part by the content and people he connected to online.

Although the idea of people being fed abhorrent rhetoric and conspiracy theories is nothing new, what we have seen in the past decade is an absolute explosion in the ready availability of this content, and easy access to the networks behind it. Gone are the days of hostile mobilisation being organised in quiet meetings in the back rooms of pubs – intolerance has changed with the times. Platforms such as Facebook and YouTube are carefully engineered to ensure you reach content and people you will find interesting. Of course, this is great if you’re into cars or Star Trek. But, as we’ve seen so clearly, the same precision-engineered recommendations work just as well if you’re into the rightwing extremist content linked to the Christchurch atrocity, or the anti-vaccine propaganda that’s contributing to children succumbing to easily preventable diseases.

YouTube in particular is extraordinary in how it continually recommends what it decides is relevant content. As an example, I’m an established antiracism campaigner with nothing in my search history to indicate an interest in flat-Earth beliefs or Sandy Hook conspiracy theories. Despite this disconnect, and with many years of my browsing data on file, YouTube is happy to continually show me this content.

Now, imagine instead I’m a disaffected and potentially vulnerable young man with access to semi-automatic weapons, or contacts and influences that could feasibly help me construct an explosive device. For such a person, that constant barrage of hateful media and disinformation could begin to sound interesting, then relatable, and eventually trustworthy. Consider too that this user-generated content goes hand in hand with the intolerance and normalised hatred we’re seeing in the mainstream media. The Christchurch suspect was described on the front page of the Daily Mirror as an “angelic boy who grew into a killer”, in direct contrast to its “Isis maniac” description of the jihadist extremist who killed a similar number in Orlando. With this double standard so prominent in traditional publications, the most extreme user-generated rhetoric gains an implied legitimacy.

I’m often asked: what can the platforms do? There’s so much content, and moderating is so difficult, right? Wrong. I’m not having this for a second. I will say this with confidence: the platforms can do a whole lot, but aren’t doing it. These networks are built and staffed by some of the finest minds in modern software engineering. I know this because I’ve spent the past decade sitting in audiences at conferences listening to these people show off their impressive tech.

Here’s an example: YouTube is comfortably capable of sifting through its billions of videos and picking up a minuscule snippet of music amid the noise, correctly identifying it and tagging it for licensing. Of course, it was compelled to do this because of money; and what’s more, it has had this capability for a decade now. Now consider the wealth of information available to YouTube before you even look at the content of the video itself: titles, descriptions, tags, users, playlists, likes and dislikes. Hobbyist computer programmers regularly experiment with this to do interesting things in just a few days, or a few hours even – this is rudimentary data-wrangling, not rocket science. A step up from that is to begin looking at the contents of the video itself: we’re in a golden age of advancements in machine learning and AI, and yet apparently none of this is currently being used to put the house in order. More succinctly: if anyone at YouTube (or any other platform) goes on record to say that catching a lot of this stuff isn’t possible, I would be deeply sceptical.
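To make the "rudimentary data-wrangling" point concrete, here is a minimal sketch in Python of scoring videos on metadata alone. It does not describe how YouTube or any other platform actually works: the `VideoMeta` record, the `WATCHLIST` terms, the field weights and the review threshold are all hypothetical placeholders chosen for illustration.

```python
import re
from dataclasses import dataclass, field

# Hypothetical watchlist: placeholder terms with illustrative weights.
WATCHLIST = {"example-slogan": 3.0, "example-symbol": 2.0, "example-forum": 1.0}

# Hypothetical field weights: a match in the title counts for more
# than the same match buried in the description.
FIELD_WEIGHTS = {"title": 2.0, "tags": 1.5, "description": 1.0}


@dataclass
class VideoMeta:
    """Metadata available before looking at the video itself (assumed shape)."""
    video_id: str
    title: str
    description: str = ""
    tags: list[str] = field(default_factory=list)


def tokens(text: str) -> set[str]:
    """Lowercase the text and split it into word-like tokens, keeping hyphens."""
    return set(re.findall(r"[a-z0-9][a-z0-9-]*", text.lower()))


def score(video: VideoMeta) -> float:
    """Sum the weights of watchlist terms found in each metadata field."""
    fields = {
        "title": video.title,
        "tags": " ".join(video.tags),
        "description": video.description,
    }
    total = 0.0
    for name, text in fields.items():
        found = tokens(text)
        for term, weight in WATCHLIST.items():
            if term in found:
                total += weight * FIELD_WEIGHTS[name]
    return total


def flag_for_review(videos: list[VideoMeta], threshold: float = 4.0) -> list[str]:
    """Return IDs of videos at or above the threshold, highest score first."""
    scored = [(score(v), v.video_id) for v in videos]
    return [vid for s, vid in sorted(scored, reverse=True) if s >= threshold]
```

A sketch like this only queues videos for human review; it does not remove anything by itself, and a real system would need far better signals than keyword matching. The point is only that the metadata is cheap to inspect.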

Can we ever realistically catch everything at this scale? Of course not, but I’m wholly convinced that a lot can be caught, blocked, banned, eradicated. Every extremist post and video that a vulnerable young person doesn’t see is another small victory for us all. And of course, identifying content identifies users, and identifying users identifies networks. There’s no instant fix, but reducing the volume of this material makes us safer.

And for those who cry censorship, nobody’s free speech is being violated. Freedom of speech doesn’t mean that all speech is welcome, nor does it protect you from someone showing you the door. There’s a suggestion that removing this content from major platforms will simply send users elsewhere, but that misses the point: yes, there are plenty of disparate and growing “elsewheres” (the Christchurch suspect was a member of notorious internet cesspool 8chan), but these pale in comparison to the algorithmically driven recommendation engines that point to a monstrous library of content and networks of people. The average at-risk young person isn’t logging on to the dark web or frequenting relatively obscure, impenetrable forums; they’re trawling YouTube.

Enough is enough. Our communities are under siege. Despite the clear evidence that these enormous platforms are the key frameworks upon which a wealth of toxic online cultures are built, nothing tangible is changing. I am calling them out, and we as a society have an urgent imperative to do the same.

The Guardian
